October 13, 2025

Opulent OS Heavy — Get Started

Ship agents that finish the job. Heavy gives you context guardrails, tool hygiene, and verification loops—so long runs end in working artifacts.

Why Heavy?

Agents stall from context blowups, tool thrash, and missing self-checks. Opulent OS Heavy tackles these pain points head-on.

Heavy shows the right facts at the right time—load → compress → isolate. The result: runs that finish.

Heavy's promise: Agents built on Heavy write → select → compress → isolate context; choose the right tools; and verify their work—so they can take on multi-hour tasks and still deliver production-ready artifacts.

Try it now: run the 10-minute starter (Automated PR Review) in the Quickstart below.

Frontier Model Support: Performance at Scale

Heavy works with Claude, GPT-4, Gemini, DeepSeek—and gets more from the same model via planning, codebase knowledge, and verification.

Recent Sonnet-4.5 shifts pushed Heavy ~2× faster and +12% on internal Junior Developer evals—architecture mattered as much as the model swap.

Token Guardrails: At 80% of the context window, summarize; at 90%, snapshot. Keep only essentials; move details to memory.

Parallel vs Sequential: Parallel early, serialize late: speed helps when context is empty; it burns tokens fast near the limit.

Confidence‑Aware Pauses: Obey model confidence (🟢/🟡/🔴): auto‑proceed when high, pause for approval when low.

GDPval: Real-World Economic Value

We measure real work: tasks with files, context, and deliverables.

Case studies: Teams ship multi-repo features the same day and automate repeatable work (analytics instrumentation, PRs, CI/CD migrations).

Scaling AI Workforces: Heavy's durable orchestration enables organizations to deploy multiple agents simultaneously—each handling distinct professional tasks with isolated context and dedicated tool access.

Core Capabilities

Heavy provides five pillars that enable agents to work autonomously and finish production-grade work.

Smart Context Engineering

Load what matters. Summarize before it hurts. Keep runs clear over hundreds of turns.

Authoritative memories: scratchpad (now), episodic (wins), procedural (checklists). At 80%→compress; 90%→snapshot/spawn.

Ergonomic Tools

Tools are designed for LLM cognition: named semantically, with concise and detailed modes and clear namespaces, so agents don't hallucinate or misidentify operations. Semantic identifiers replace UUIDs, and namespaced boundaries keep similar tools apart.

MCP Marketplace: Plug MCP servers (Datadog, Sentry, Figma, Airtable) with least-privilege scopes.

DeepWiki-class codebase Q&A: Index repos; ask, cite, and navigate without reading every file.

Memory Systems

Scratchpads persist current session state, episodic memories store successful past runs, and procedural memories capture proven strategies—all searchable and selectively exposed.

Learn like a new hire: Capture heuristics ('how we analyze'), then expose them at decision time.

Permission Boundaries: Set least-privilege scopes for all connectors and data access. Isolate sensitive data with explicit boundaries—agents should know what they can query and what requires human approval.

Verification Loops

Test as you go. Parallel checks produce evidence-backed artifacts.

Confidence‑Aware Execution: Frontier models surface confidence scores at planning and execution steps. Heavy obeys these signals—auto‑proceed when high, pause for approval when low.

Parallel Execution

Heavy lets agents run independent tool calls concurrently and switch to sequential when dependencies matter.

Throttle near token limits: Parallel burns context faster.
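A minimal sketch of "parallel early, serialize late", assuming tool calls are async callables. `fetch_tool`, `run_tools`, and the 0.8 throttle threshold are hypothetical names and values for illustration, not Heavy's API.

```python
import asyncio


async def fetch_tool(name: str) -> str:
    """Stand-in for one independent tool call (hypothetical)."""
    await asyncio.sleep(0.01)  # simulate I/O latency
    return f"{name}:ok"


async def run_tools(names: list[str], context_used: float, throttle_at: float = 0.8) -> list[str]:
    """Fan out while context is mostly free; run one at a time near the limit."""
    if context_used < throttle_at:
        # Independent calls: run concurrently, results stay in input order.
        return list(await asyncio.gather(*(fetch_tool(n) for n in names)))
    # Near the limit: sequential, so each result can be summarized before the next.
    results = []
    for name in names:
        results.append(await fetch_tool(name))
    return results
```

Both paths return the same results; only the scheduling changes, which is what makes the switch safe to automate.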

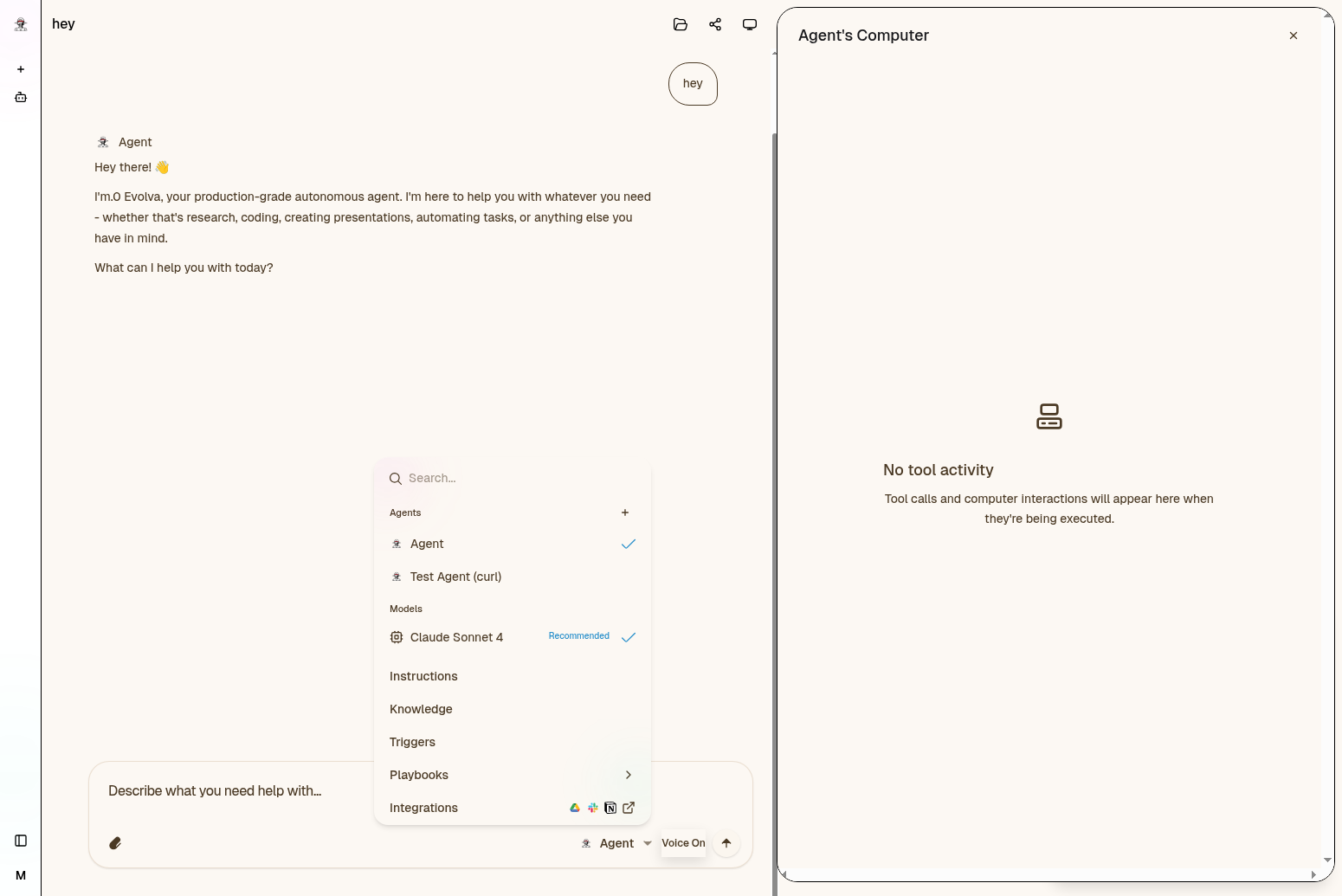

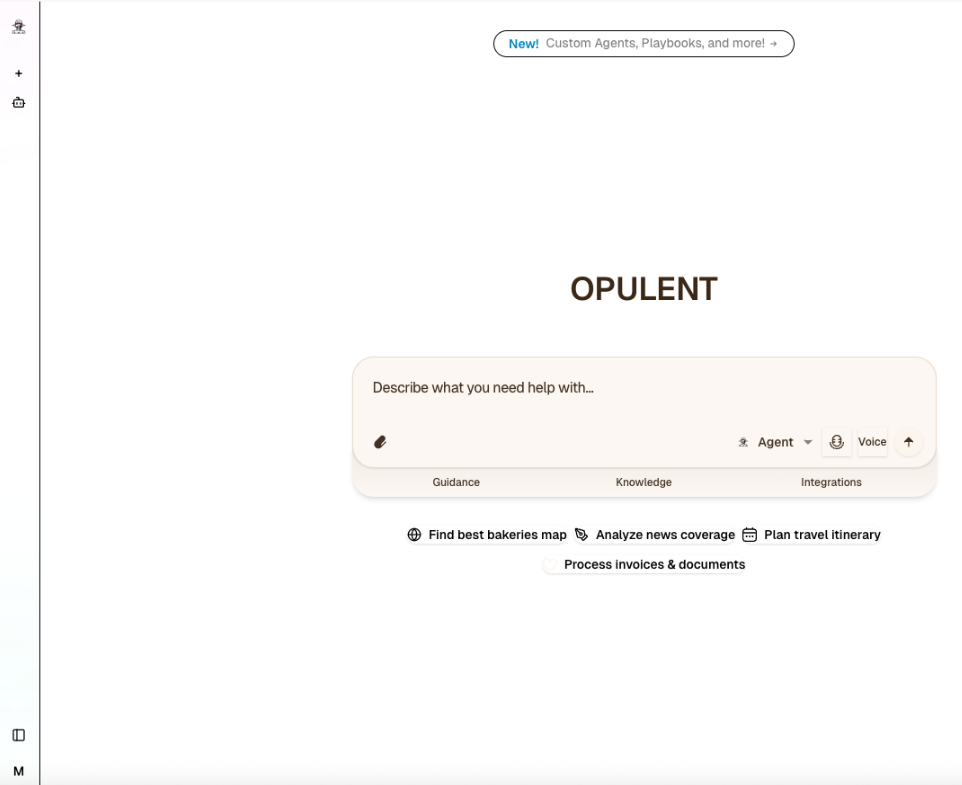

Interface Gallery

See Heavy in action: (1) Plan Preview (required) → approve; (2) PR review → comments in 5–10 min. Each example shows real micro-demos of key workflows.

Unified command surface

Multi-stage run timeline

Agent builder interface

Progressive guidance system

Integration marketplace

Credential management

Live query workspace

Cohort validation

Model economics

Artifact review panel

Query summary card

Document ingestion

Policy pack editor

Orchestration timeline

Guide Structure: Writing & Visual Best Practices

Show work, not chrome. Pair every claim with an artifact (diff, SQL, chart) and a 1-line 'why it's correct.' Captions must cite the evidence (file/line/test/run link).

Instruction & Session Best Practices

- Session Length: Keep runs under ~10 ACUs. Split long work into linked sessions. If progress stalls for ~10–15 min, regenerate the plan instead of patching a bad trajectory.

- First message checklist: requirements + acceptance tests/PR steps + 3–5 key files + open questions. Save as a reusable template in Knowledge.

- Voice OK: Explain tricky tasks verbally when faster. Attach transcript to the brief so it survives context rotation.

- Task Complexity Alignment: Ship a win today—one verifiable artifact, then templatize it. Choose tasks that agents can complete within a few hours with clear acceptance criteria.

Quickstart: Launch in 10 Minutes

Automated PR Review (GitHub Actions + API key)

1. Pick a 2-hour task (bugfix, refactor, CI fix).

2. Wire PR trigger (GitHub Actions + API key).

3. Provision context (repo index + 3 spec/issue links).

4. Plan Preview (required) → approve/edit before execution.

5. Gates: tests on; confidence ≥0.6 to auto‑comment; human approval to merge.

6. Run → review artifacts (diffs/tests) → promote.

Do the first run with Plan Preview (required), confidence‑gated comments, and human‑approved merges. If the plan looks off, regenerate it (cheaper than mid‑run debugging).
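The Quickstart gates can be sketched as one decision function. This is an illustrative sketch only: `RunResult`, `pr_gate`, and the action names are hypothetical, and only the 0.6 threshold and the human-approval rule come from the steps above.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    tests_passed: bool
    confidence: float  # 0.0-1.0, as reported by the agent


def pr_gate(result: RunResult, human_approved: bool = False, min_confidence: float = 0.6) -> str:
    """Return the action the gates allow for this run."""
    if not result.tests_passed:
        return "block"             # tests are always on
    if human_approved:
        return "merge"             # merging needs explicit human approval
    if result.confidence >= min_confidence:
        return "auto_comment"      # confident enough to post review comments
    return "pause_for_review"      # low confidence: wait for a human
```

Keeping the gate as a pure function makes it easy to unit-test before wiring it to a PR trigger.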

Ship a win today: one verifiable artifact, then templatize it. When a task works, turn it into a playbook.

When Heavy Shines

Best yield: tasks an engineer can do in a few hours with clear acceptance.

Deploy Heavy for multi-stage tasks that need tools and deliver tangible outputs:

Ideal Scenarios:

- Targeted code refactors

- CI/CD debugging and migrations (e.g., Jenkins → GitHub Actions)

- Automated PR reviews with contextual analysis

- Cited research briefs

- Automated reports or decks

- Data extractions and analytics workflows

- Scripted operations

- Rapid prototyping when team bandwidth is limited

Solid Choices: Spikes that still ship an artifact (notes/PoC/table).

Avoid For: One-off Q&A, fuzzy ideation without criteria, or work blocked by missing permissions.

Operationalize wins: When a task works, templatize it as a playbook.

Build Your AI Data Analyst: Intelligent Tool Design

Design tools for how LLMs think—unify discovery → query → validation. Instead of multiple separate steps, Heavy provides unified operations like search_data() that handle discovery, execution, and validation in one call—returning only relevant information. Always return the SQL + sample + confidence.
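A rough sketch of what a unified discovery → query → validation call could look like, using an in-memory SQLite database. The source names `search_data()` but not its signature, so the parameters, the `SearchResult` type, and the confidence heuristic here are all assumptions.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class SearchResult:
    sql: str             # exact SQL that produced the sample
    sample: list[tuple]  # small preview, not the full result set
    confidence: float    # crude, hypothetical heuristic (see below)


def search_data(conn: sqlite3.Connection, keyword: str, limit: int = 10) -> SearchResult:
    """Discover a table by name, query it, and validate the result in one call."""
    # Discovery: find tables whose name contains the keyword.
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' AND name LIKE ? ORDER BY name",
        (f"%{keyword}%",),
    )]
    if not tables:
        return SearchResult(sql="", sample=[], confidence=0.0)
    # Execution: the table name comes from sqlite_master, not from user input.
    sql = f'SELECT * FROM "{tables[0]}" LIMIT {int(limit)}'
    sample = conn.execute(sql).fetchall()
    # Validation: lower the score when the match is ambiguous or the table is empty.
    confidence = (1.0 if len(tables) == 1 else 0.5) * (1.0 if sample else 0.5)
    return SearchResult(sql=sql, sample=sample, confidence=confidence)
```

The caller always gets the SQL, a sample, and a confidence value together, so a reviewer never has to ask which query produced a number.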

MCP Integration: Use MCP to wire warehouses safely; agents don't handle raw creds and get schema/exec/format tools via a standard bridge.

Prerequisites: Prefer legible, versioned data (dbt). If not, map your current architecture and flows before first run. Create read-only DB users for production access.

What Makes Heavy's Approach Different

Traditional chat interfaces give you text responses. Heavy gives you verified artifacts with full provenance—every query shows the exact SQL, sample results, and confidence assessment in a structured tool view.

- Live Streaming: Watch queries execute in real-time with SSE streaming. See parameter extraction, schema validation, and result processing as they happen—no black box delays.

- Tool View Registry: Each database operation renders in a specialized component—SQL queries show syntax-highlighted code, result previews, and execution metadata. Web searches display sources with relevance scoring. File operations show diffs with context.

- Playback & Sharing: Every analytical session becomes a reviewable artifact. Share run links with stakeholders who can replay the entire investigation—see what questions were asked, which queries ran, and how conclusions were reached.

- Context Budgeting: Heavy automatically manages token allocation across prompt and completion based on model context windows. Large analytical contexts (300K+ tokens) get intelligently compressed while preserving critical information.

Why Tool Design Matters

- Use Natural Names: customer_name instead of cust_uuid_b4a2. Clear identifiers reduce errors significantly.

- Combine Related Operations: One get_customer_context call instead of multiple separate queries.

- Flexible Detail Levels: Agents choose between quick summaries or comprehensive information based on their needs.

- Clear Organization: Distinct tool names prevent confusion when working with many data sources.

- Smart Results: Default to focused, relevant data that agents can expand when needed.

30-Minute Playbook: Analyst Setup

Start with the 30-minute playbook below; save the checklist as procedural memory.

- Activate MCP (read-only): Settings → MCP Marketplace → 'Databases' filter → choose PostgreSQL (or your DB). Fill POSTGRES_HOST / DATABASE / USER / PASSWORD / PORT. Click Test listing tools; if OK, Enable then Use MCP.

- Index repos with DeepWiki-class docs for code/ETL lineage Q&A.

- Design 'Question → SQL → Artifact (+SQL shown)' and always show final SQL. Consider parallel execution for independent queries but monitor context consumption.

- Layer gates: schema check, cohort check, sample table, citations. Add automated tests for calculations.

- Attach the verification checklist to the brief.

- Share run link in analytics channel; reviewers reply in-thread.

MCP Marketplace & Connection

Quick Setup: Settings → MCP Marketplace → filter to "Databases" → select your database type (PostgreSQL, MySQL, etc.)

Configure: Enter connection details (host, database, user, password, port). Use read-only credentials for production.

Test: Click "Test listing tools" to verify connection. Enable → Use MCP to start your first session.

Troubleshooting (quick box)

- network is unreachable → verify host/port/network path.

- connection refused → confirm pooler type/port; security group/firewall.

- auth failures → rotate read-only creds; re-enable MCP.

Knowledge & Macros

Create an Analytics Knowledge block and attach a macro (e.g., !analytics). Anyone can invoke it in a message; Heavy will load the full content automatically.

## Purpose:

How to query the warehouse (which tool/MCP).

## Guidelines:

- Get full schema (database://structure).

- Read analytics repo README.md overview.

- Read docs.yml model docs.

- Prefer mart models over int_/stg_.

- Prefer analytics schema over raw.

- If unsure, propose tables and ask for confirmation.

- If needed, use Python (staging) for light EDA (pandas/numpy) before final SQL.

## Output format:

- Always show the final SQL that produced the answer + the result.

- Include a playground link (Metabase/BI) labeled "Open in Playground."

- Include a small chart/table (markdown) when helpful.

- If a single number, surface it prominently with units/timeframe.

Verification Checklist (paste into briefs)

- Exact SQL query used for this artifact

- Source tables/columns plus trust rationale

- Filters (e.g., dates, cohorts) with reasoning

- Spot-check sample table (10-row sample)

- Confidence score, gaps, and next actions

- Link to the BI playground used to verify the figure
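If you want the checklist enforced rather than pasted, it can be modeled as a small record with a completeness check. A sketch under assumptions: the field names and `missing_items` are hypothetical, chosen to mirror the six items above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VerificationChecklist:
    sql: str = ""                       # exact SQL query used
    sources: str = ""                   # tables/columns plus trust rationale
    filters: str = ""                   # dates, cohorts, with reasoning
    sample_rows: int = 0                # size of the spot-check sample
    confidence: Optional[float] = None  # None means "not assessed yet"
    playground_link: str = ""           # BI playground used to verify


def missing_items(checklist: VerificationChecklist) -> list[str]:
    """Return the names of checklist items that are still empty."""
    missing = [
        name for name in ("sql", "sources", "filters", "playground_link")
        if not getattr(checklist, name).strip()
    ]
    if checklist.sample_rows <= 0:
        missing.append("sample_rows")
    if checklist.confidence is None:  # 0.0 is a valid (low) score, not a gap
        missing.append("confidence")
    return missing
```

A brief can then be rejected automatically while `missing_items` returns anything.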

Real‑world analytics workflows

The analytics loop is inherently iterative: form a hypothesis, run a query, refine, visualize, and share. Heavy captures this as a durable run with artifacts you can verify and edit. Below are common patterns we see again and again.

Example: Morning Analytics Brief

Your prompt: "Give me a morning analytics brief: active users yesterday, top 3 conversion drops, and any anomalies in payment processing."

What you see (streaming in real-time):

- Schema Discovery Tool: Floating preview shows "Exploring analytics.daily_active_users, analytics.conversion_funnel, payments.transactions..." Parameters stream as the agent identifies relevant tables.

- SQL Execution Tool: Query appears with syntax highlighting. You see the exact WHERE clauses, JOINs, and GROUP BY logic before it runs. Status updates: "Validating query → Executing (2.3s) → Processing 1.2M rows → Complete"

- Result Verification: Tool view shows sample rows (10-row preview), aggregate metrics, and confidence assessment. Each claim links back to the exact query that produced it.

- Anomaly Detection Tool: Another query streams through—comparing yesterday's payment success rate vs. 7-day baseline. Result: "Payment gateway timeout rate increased 3.2× (0.8% → 2.6%). Sample affected transactions attached."

What you receive: A structured Plate report artifact with inline charts, exact SQL for each metric, confidence scores, and links to the BI playground where you can modify and re-run queries. Share the run link with your team—they see the full investigation trail, can replay it step-by-step, or fork it for their own analysis.
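The anomaly step in this example boils down to a ratio against a baseline. A minimal sketch, assuming a simple relative-increase rule: `rate_anomaly` and the 2.0 threshold are hypothetical, and only the 0.8% and 2.6% figures come from the example.

```python
def rate_anomaly(baseline_rate: float, current_rate: float, min_ratio: float = 2.0) -> dict:
    """Compare a current rate with a baseline and flag large relative increases."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    ratio = current_rate / baseline_rate
    return {"ratio": ratio, "is_anomaly": ratio >= min_ratio}


# Figures from the example: 0.8% 7-day baseline, 2.6% yesterday.
result = rate_anomaly(0.008, 0.026)
```

With these inputs the ratio is 2.6 / 0.8 = 3.25, which the report quotes as roughly 3.2×.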

Level Up: Advanced Analytics

- Index repos with DeepWiki-class docs first: Ask 'why is column X computed this way?' with a link back to code lines. Navigate without reading every file.

- Map Warehouses: Catalog activity sources and joins; flag reliable tables with data lineage.

- Evolve Schemas: Suggest or add columns (e.g., ARR forecasts) with safe migrations.

- Spot Gaps Early: Surface un-migrated data or missing events before they escalate.

- Segment Deeply: Build conversion or retention views by industry, scale, and region—with transparent filters.

- Track Adoption: Benchmark pre/post-launch metrics (e.g., MCP usage).

- Project Ahead: Layer forecasts with explicit assumptions and confidence bands.

Pro Tip: Always document “Why this column?” inside artifacts. Recorded lineage helps avoid pitfalls that stand-alone SQL copilots often miss.

Effective Instructions: Clear, Focused Briefs

Precise, well-structured instructions improve agent reliability. Use this proven template for consistent results:

**Goal:**

Your one-line desired outcome.

**Context:**

Key links, status quo, and what is *not* in scope.

**Inputs/Resources:**

URLs, files, APIs (scoped creds), prior artifacts.

**Deliverables:**

Formats (e.g., PR, MD, CSV, Deck) + drop locations.

**Acceptance Criteria:**

Pass/fail checks (schema match, citations required, etc.).

**Constraints:**

Time/budget limits, approved tools, and style rules.

**Milestones/Checkpoints:**

Review points (plan, draft) before the final push.

**Edge Cases/Fallbacks:**

Known tricky spots plus recovery plans.

**Review:**

Approval flow + key proofs (diffs, logs).

VC Brief Templates: Tight, Evidence-Based

Validated against top VC playbooks (4Degrees, Affinity, DealRoom). Enforce narrow scopes and cross-verified sources.

1. Due Diligence Snapshot (Series A/B)

**Goal:** 1-page DD snapshot for <Company> at <Round>.

**Inputs:** Pitch deck, data room, public intel (site, filings, news, pricing), analyst notes.

**Deliverable:** docs/dd/<company>-<round>-snapshot.md — sections: Company, Market/TAM-SAM-SOM, Product, Traction, Team, Risks, Questions.

**Acceptance:** ≥6 cited sources (URLs); competitor matrix [Company | Positioning | Stage | Rev Est. | Customers]; 3 key risks; 5 diligence asks.

**Constraints:** 60 min cap; public data only; confidence per section.

**Milestones:** Outline → Sources → Draft → Polish.

2. Market Sizing + Competitive Scan

**Goal:** Validate market size and map top 10 competitors for <Category> in <Region>.

**Inputs:** Market reports, vendor sites, pricing, funding news, analyst coverage.

**Deliverable:** docs/research/<category>-market-2025.md — TAM/SAM/SOM (method + math), two growth scenarios, competitor matrix (features/pricing/ICP), investment implications.

**Acceptance:** Show top-down and bottom-up math; ≥8 sources; no single-source claims; call out confidence and data gaps.

**Constraints:** 45 min; prioritize primary/recency.

**Milestones:** Method & assumptions → Data pull → Matrix → Write-up.

3. Portfolio KPI Monitor (Pilot)

**Goal:** Stand up a CSV snapshot of monthly KPIs for 3 portfolio cos.

**Inputs:** Public/portfolio updates, newsletters, hiring pages, release notes.

**Deliverable:** data/portfolio-kpis.csv [Company, Month, ARR cue, Growth cue, Hiring cue, Churn cue, Notable events, SourceURL].

**Acceptance:** ≥3 months history each; ≥2 sources per row; README.md explaining heuristics; flag assumptions.

**Constraints:** 30 min; public signals only.

**Milestones:** Schema → 3-row sample → Full file.

Evidence‑first: require URLs and note confidence; prefer triangulated public data over vendor marketing claims.

Prerequisites: Ready to Roll?

- Heavy account + workspace access

- Tailored integrations (data, repos, tools)

- Orchestration and workspace layers managed automatically (fast snapshots keep work reproducible between runs)

- API key configuration for integrations

Integrations: Power Your Agents

Wire MCP connectors with scoped keys and budgets; monitor in one place. For heavy lifts, preload workspace adapters (browser, code, file ops) so every action stays reproducible.

Foundation Pillars

- Secure Onboarding: Least-privilege keys in vaults with hard budgets.

- Explore Schemas: Expose tables and lineage for low-risk querying.

- Execute Robustly: Run SQL/APIs with retries and idempotency.

- Process Outputs: Generate polished visuals, tables, and artifacts.

- Share Seamlessly: Publish linked results with full audit diffs.

Connectors Overview

- Warehouse: Read-only SQL with auto-retries.

- Docs: Cited grounding snippets.

- Repos & Code Indexing: Sandboxed clones, PRs, and sophisticated semantic search tools—enable Q&A on any repository, documentation discovery, dependency mapping, and architecture analysis.

- MCP Marketplace: Datadog (monitoring), Sentry (error tracking), Figma (design context), Airtable (structured data), GitHub/GitLab APIs (automated PR reviews, CI/CD workflows).

- Browser/API: Throttled fetches with safeguards.

- Sheets: Budgeted read/write lanes.

- Dashboards: Embeddable charts for quick review.

Granular Permission Patterns

- Least-Privilege Scopes: Set specific permissions per connector—read-only database access, scoped API keys, bounded query patterns.

- Compliance Boundaries: Explicitly define what agents can query. Store boundaries in procedural memory for consistent enforcement.

- Automated PR Reviews: Connect GitHub/GitLab APIs to trigger agents on PR events—contextual code analysis with repository indexing tools.

- CI/CD Automation: Wire Jenkins, GitHub Actions, or GitLab CI for migration workflows, pipeline conversion, and deployment automation.

Memory & Knowledge: Smart Context Management

Heavy uses four operations to manage context intelligently: Write, Select, Compress, Isolate. Instead of overwhelming agents with information, Heavy strategically controls what they see and when.

- Write Context: Save important information strategically—session state, successful patterns, and proven approaches.

- Select Context: Load only what's relevant for the current task, not everything available.

- Compress Context: Summarize automatically when space gets tight, keeping essential details clear.

- Isolate Context: Keep separate tasks independent, preventing confusion and cross-contamination.

Why This Matters: Poor context management causes agents to fail. Heavy's intelligent approach prevents these failures through strategic information control.
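The four operations can be pictured with a toy in-memory store. This is a sketch for intuition, not Heavy's implementation: `ContextStore`, its method signatures, and the placeholder summary line are all hypothetical, and real compression would summarize content rather than count notes.

```python
from dataclasses import dataclass, field


@dataclass
class ContextStore:
    """Toy store: one isolated list of notes per task."""
    memory: dict[str, list[str]] = field(default_factory=dict)

    def write(self, task: str, note: str) -> None:
        # Write + Isolate: each task keeps its own notes under its own key.
        self.memory.setdefault(task, []).append(note)

    def select(self, task: str, keyword: str) -> list[str]:
        # Select: load only this task's notes that mention the keyword.
        return [n for n in self.memory.get(task, []) if keyword.lower() in n.lower()]

    def compress(self, task: str, keep_last: int = 2) -> None:
        # Compress: replace older notes with one placeholder summary line.
        notes = self.memory.get(task, [])
        older = len(notes) - keep_last
        if older > 0:
            self.memory[task] = [f"[summary of {older} earlier notes]"] + notes[older:]
```

Because notes are keyed by task, compressing or selecting for one task never touches another task's context.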

Builders: Design Once, Run Continuously

Transform repetitive work into automated workflows. Heavy's builder interface lets you design agents that run on demand or trigger automatically—responding to code changes, data updates, scheduled times, or business events.

Agent Builder: Your AI Workforce

Create specialized agents for specific tasks—each with its own tools, policies, and validation rules. Think of agents as team members who handle focused responsibilities: one for data analysis, another for code reviews, a third for customer research.

- Interactive Planning: Preview the plan (files, findings) before autonomous run. This front-loads context understanding and reduces mid-execution surprises.

- Natural Language Setup: Describe what you want your agent to do; the builder generates the configuration.

- Manual Mode: For precise control, configure tools, policies, and validation gates directly.

- Tool Assignment: Give each agent only the tools it needs—analytics, code analysis, web search, or database access.

- Policy Integration: Embed guardrails and constraints directly into agent behavior.

Concurrent Agent Guidance

Core Principle: Prefer one agent with persistent context; add helpers sparingly and summarize across boundaries to prevent fragmentation.

- Shared Context Principle: When spawning multiple agents, ensure they share context or receive comprehensive summaries before delegation. Avoid fragmented decision-making across isolated agents—each agent needs enough context to make coherent decisions.

- Context Consumption Trade-offs: Multiple agents can run simultaneously for parallelism, but monitor aggregate context usage. Use Heavy's context management to balance speed (parallel execution) vs. efficiency (sequential with shared state).

- Complex Workflow Patterns: For multi-step tasks (e.g., CI/CD migrations), use workflow builder to orchestrate: read documentation agent → conversion agent → testing agent → validation agent. Each step receives summarized context from previous stages.

Workflow Builder: Orchestrate Complex Tasks

String multiple agents together into workflows that handle multi-step processes. Each workflow follows clear stages—Plan → Research → Act → Verify → Deliver—with checkpoints and human review gates where needed.

- Typed Steps: Each stage knows what it receives and what it must produce.

- Validation Gates: Automatic checks ensure quality before moving forward.

- Artifact Outputs: Every workflow produces reviewable results—diffs, reports, datasets, or recommendations.

- Progress Tracking: Visual timelines show exactly where work stands.

Triggers: Work While You Sleep

Set up agents to respond to real-world signals without manual starts. Start with three high-ROI triggers: PR-opened, build-failed, dashboard-updated. Heavy supports multiple trigger types:

- Schedule Triggers: Daily market summaries, weekly performance reports, monthly audits. Use for model retraining, periodic codebase indexing, or recurring evaluation tasks.

- Event Triggers: PR opened/merged (automated code reviews), build failures (root cause analysis), metric thresholds crossed, customer tickets escalated. Connect GitHub/GitLab APIs, CI/CD webhooks, monitoring systems.

- Data Change Triggers: Updated dashboard, new database records, changed configurations. Trigger data validation, anomaly detection, or report generation.

- Calendar Integration: Pre-meeting briefs, post-meeting summaries, deadline reminders.

- Webhook Triggers: External system events, API callbacks, third-party notifications. Wire Slack messages, Jira ticket updates, or custom application events.
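Whatever the trigger type, the core mechanic is mapping an event name to one or more handlers. A minimal sketch, assuming events arrive as a name plus a dict payload: `TriggerRegistry`, the event names, and the payload keys are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], str]


class TriggerRegistry:
    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def on(self, event_type: str):
        """Decorator that registers a handler for one event type."""
        def register(fn: Handler) -> Handler:
            self._handlers[event_type].append(fn)
            return fn
        return register

    def dispatch(self, event_type: str, payload: dict) -> list[str]:
        """Call every handler for the event; unknown events do nothing."""
        return [fn(payload) for fn in self._handlers.get(event_type, [])]


registry = TriggerRegistry()


@registry.on("pr_opened")
def review_pr(payload: dict) -> str:
    return f"review queued for PR #{payload['number']}"


@registry.on("build_failed")
def diagnose_build(payload: dict) -> str:
    return f"root-cause analysis queued for build {payload['build_id']}"
```

Starting with two or three registered events keeps the blast radius small while you learn which triggers pay off.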

Event-Based Automation Patterns

- PR Review Automation: Trigger agent when PR opens via GitHub Actions—agent performs contextual code analysis using repository indexing, checks for security issues, validates tests, and posts review comments.

- CI/CD Pipeline Monitoring: Connect build failure events to diagnostic agents—automatically analyze logs, identify root cause, propose fixes based on recent commits and code context.

- Data Quality Monitoring: Trigger on database changes or dashboard updates—validate data consistency, check for anomalies, alert on threshold violations with contextual explanations.

Real Examples

Morning Intelligence Brief

Trigger: Every weekday at 7 AM

Workflow: Research agent → Analysis agent → Summary agent

Output: Personalized brief with competitor moves, market trends, and today's priorities—delivered before you start work.

Automated Code Review

Trigger: New pull request opened

Workflow: Analysis agent → Security check → Test coverage agent

Output: Detailed review with security findings, test recommendations, and code quality metrics—ready before human review.

Customer Churn Prevention

Trigger: Usage drops below threshold

Workflow: Data agent → Analysis agent → Action agent

Output: Risk assessment with historical patterns, suggested interventions, and draft outreach—flagged for your CS team.

Scheduled Runs: Always Current

Automate freshness—daily briefs or weekly fixes—via isolated, budgeted triggers with approvals. Outputs land as auditable artifacts, routed to PRs or emails for team visibility.

Supports cron/intervals, webhooks, and guardrails to block mishaps before promotion.
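For a schedule like "every weekday at 7 AM", the next run time can be computed with the standard library. This sketch ignores time zones and holidays, and `next_weekday_run` is a hypothetical helper, not a Heavy scheduler call.

```python
from datetime import datetime, time, timedelta


def next_weekday_run(now: datetime, at: time = time(7, 0)) -> datetime:
    """Return the next Monday-Friday occurrence of `at` strictly after `now`."""
    candidate = datetime.combine(now.date(), at)
    if candidate <= now:
        candidate += timedelta(days=1)   # today's slot has already passed
    while candidate.weekday() >= 5:      # 5 = Saturday, 6 = Sunday
        candidate += timedelta(days=1)
    return candidate
```

Called on a Friday after 7 AM, it skips the weekend and returns Monday's slot.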

Your First Run: Step-by-Step Guide

Follow these steps to launch your first successful agent workflow.

1. Set Goals + Criteria

Craft a tight paragraph with deliverables and metrics. Focus on one artifact—diff, report, deck, or dataset.

Confidence Thresholds: Specify decision criteria based on agent confidence—e.g., "proceed only if agent confidence ≥ 0.6, otherwise pause for human review." If confidence < threshold, auto-route to review with artifacts attached.

Explicit Constraints: Define boundaries clearly—approved data sources, allowed operations, required validation checks. Store constraints in procedural memory for consistent enforcement across runs.

Enforcement: Plans must include tests/checks that prove acceptance or the run pauses for revision.

2. Compose the Workflow

Lay out Plan → Tool Use → Verify → Learn. Attach the right tools and policies; keep every step small and verifiable.

Plan Preview is required: Edit before any tool call. Review and refine—front-loading context understanding reduces mid-execution surprises and ensures alignment with your requirements.

Context-Aware Tool Selection: Use sophisticated repository indexing for semantic search and architecture analysis. Layer in relevant documentation, verification checklists, and domain heuristics before agents begin work. This strategic context provisioning improves decision quality throughout execution.

3. Add Gates & Guardrails

Slot schema checks, policy gates, and merge thresholds. Prefer dry-run lanes until confidence climbs.

Automated Testing Integration: Include unit tests and continuous integration checks as validation gates. Agents should write and execute tests as they work—creating feedback loops that catch errors before they cascade. Test failures trigger automatic rollback or human review.

Confidence-Based Branching: Configure workflows to branch based on agent confidence scores—high-confidence paths proceed automatically, low-confidence paths pause for human approval. Example: database modifications require ≥ 0.8 confidence or explicit approval.

Batch/Parallel Edits: Enable batch/parallel edits only when steps are independent; otherwise run sequential with checks.

Dry-Run Validation: Use non-destructive validation lanes for first executions—test against staging environments, verify outputs without committing, and promote only after manual review. Graduate to production automation as confidence patterns emerge.
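One way to picture a dry-run lane for database writes is "execute, inspect, roll back". The sketch below uses SQLite's transaction rollback as a stand-in; `dry_run` is a hypothetical helper, and it assumes the connection has no other uncommitted work, since the rollback would discard that too.

```python
import sqlite3


def dry_run(conn: sqlite3.Connection, statement: str, params: tuple = ()) -> int:
    """Execute a write, report affected rows, and always roll it back."""
    try:
        cursor = conn.execute(statement, params)
        return cursor.rowcount
    finally:
        conn.rollback()  # nothing from the dry run is committed
```

The returned row count is the evidence a reviewer checks before promoting the same statement to a committed run.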

4. Run & Review

Execute once, then audit the trace. Use Computer Views for diffs, logs, visuals, and previews.

5. Iterate & Promote

Log misses as updated criteria, adjust prompts or policies, and rerun through a canary gate before promotion.

Evolve Policies: Observe → Reflect → Mutate → Select

Evolve prompts like code: mine traces for rules, tweak surgically, and A/B test via GEPA-style reflective loops for structured feedback.

Breakdown:

- Reflect: Distill fixes for format issues, evidence gaps, or tool misuse.

- Mutate: Apply targeted edits—spec tweaks, domain constants, or search-before-solve rules.

- Select: Prune, then A/B inside the workflows teams already touch.

Observe (Traces) → Reflect (Rules) → Mutate (Edits) → Select (A/B) → (loop back)

In Practice: Test small, log misses as criteria, and ship updates through canary gates.

Operate Durably: Verification Loops That Compound

The Generation ↔ Verification Cycle

Production agents need tight feedback loops: generate → verify → iterate. Heavy accelerates this cycle through parallel verification, self-testing, and artifact-based evidence. Rule: If tests/linters fail, Regenerate Plan and minimize diff on retry.

- Self-Testing at Each Step: Agents write validation scripts as they work—catching errors before they compound into context poisoning.

- Parallel Validation: Run tests, linters, and checks concurrently; gather evidence without blocking progress.

- Batch Edits + Multi-Action Speed: Batch edits and multi-action speed up refactors; keep guards on.

- Artifact-Based Evidence: Every change produces diffs, logs, screenshots—linked proof for human review.

- Eval-Driven Improvement: Held-out test sets measure real performance; agent-generated feedback identifies tool improvements.
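The generate → verify → iterate cycle above can be sketched as a bounded loop. Here `generate` and `verify` are caller-supplied callables, and the function name and attempt cap are assumptions for illustration rather than Heavy's interface.

```python
from typing import Callable


def generate_and_verify(
    generate: Callable[[list[str]], str],
    verify: Callable[[str], list[str]],
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Regenerate until verification reports no failures or attempts run out."""
    failures: list[str] = []
    for attempt in range(1, max_attempts + 1):
        artifact = generate(failures)  # feed prior failures back into generation
        failures = verify(artifact)    # an empty list means every check passed
        if not failures:
            return artifact, attempt
    raise RuntimeError(f"still failing after {max_attempts} attempts: {failures}")
```

Capping attempts matters: a loop that never gives up is how one bad trajectory eats the whole context budget.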

Context-Aware Orchestration

Like Sonnet 4.5's “context anxiety,” Heavy agents monitor their own token budgets—but with guardrails to prevent premature wrap-up:

- Proactive Summarization: At 80% capacity, compress tool outputs; at 90%, snapshot critical state to scratchpad.

- Parallel vs Sequential: Early in context: maximize parallelism for speed. Late in context: focus on finishing critical path.

- Token Budget Visibility: Expose accurate remaining capacity to prevent “running out sooner than expected” behaviors.
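The 80% and 90% guardrails reduce to a small threshold check. A minimal sketch: `guardrail_action` and the action labels are hypothetical, and only the two thresholds come from the text.

```python
def guardrail_action(tokens_used: int, context_limit: int) -> str:
    """Map current context usage to the guardrail that should fire."""
    if context_limit <= 0:
        raise ValueError("context_limit must be positive")
    # Integer arithmetic avoids float rounding exactly at the thresholds.
    if tokens_used * 100 >= 90 * context_limit:
        return "snapshot"   # save critical state to the scratchpad
    if tokens_used * 100 >= 80 * context_limit:
        return "compress"   # summarize tool outputs
    return "continue"
```

Checking this before every tool call is cheap and gives the agent an accurate view of remaining capacity.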

Production KPIs

Measure what matters for agents that finish work:

- Completion Rate: % of tasks finished without manual intervention

- Context Efficiency: Tokens per task milestone; tool calls per success

- Verification Pass Rate: % of artifacts passing automated checks first try

- Plan Re-approval Rate: % of runs where editing the plan avoided downstream failures

- Confidence Match Rate: Correlation between agent confidence scores and actual success. Track across runs—high match rates indicate reliable self-assessment, enabling safer automation. Low match rates signal need for additional context, verification gates, or human-in-loop decisions.

- Error Recovery Time: Turns from hallucination to correction

- Hallucination Caps: Hard limits + steering to prevent context poisoning

Confidence Calibration: Frontier models increasingly expose confidence scores, but calibration varies by model and domain. Monitor Confidence Match Rate over time—if agents consistently underestimate confidence, you can automate more aggressively. If they overestimate, add verification layers or require higher thresholds (e.g., ≥ 0.8 instead of ≥ 0.6). Production patterns show well-calibrated confidence enables 40-60% automation while maintaining quality standards.
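Confidence Match Rate is described above as a correlation, so one plausible way to compute it is the Pearson correlation between confidence scores and 0/1 outcomes. This is an assumed formula, not a documented Heavy metric definition, and `confidence_match_rate` is a hypothetical name.

```python
from math import sqrt


def confidence_match_rate(confidences: list[float], successes: list[bool]) -> float:
    """Pearson correlation between confidence scores and 0/1 outcomes."""
    if len(confidences) != len(successes) or len(confidences) < 2:
        raise ValueError("need two equal-length sequences with at least 2 runs")
    outcomes = [1.0 if s else 0.0 for s in successes]
    mean_c = sum(confidences) / len(confidences)
    mean_o = sum(outcomes) / len(outcomes)
    cov = sum((c - mean_c) * (o - mean_o) for c, o in zip(confidences, outcomes))
    var_c = sum((c - mean_c) ** 2 for c in confidences)
    var_o = sum((o - mean_o) ** 2 for o in outcomes)
    if var_c == 0 or var_o == 0:
        raise ValueError("correlation is undefined when either series is constant")
    return cov / sqrt(var_c * var_o)
```

Values near 1 suggest the agent's self-assessment tracks real outcomes; values near 0 or below suggest the thresholds should not be trusted yet.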

| Mechanism | Reliability | Safety |
|---|---|---|
| Retries / Backoff | Orchestrator retry policies | Non‑retryables for unsafe ops |
| Isolation | Separate activity queues | Dedicated workspace sandbox per run |
| Budgets | Timeouts + heartbeats | Cost/time caps; approvals |
| Auditability | Workflow event history | Artifact logs + PR diffs |
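The Retries / Backoff row can be illustrated in plain Python: retry transient failures with exponential backoff, but never repeat an operation marked unsafe. This is a generic sketch, not an orchestrator API; `NonRetryableError`, `call_with_retries`, and the delay values are hypothetical.

```python
import time


class NonRetryableError(Exception):
    """Raised by operations that are unsafe to repeat."""


def call_with_retries(operation, max_attempts: int = 3, base_delay: float = 0.01, sleep=time.sleep):
    """Retry transient failures with exponential backoff; re-raise unsafe ones at once."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except NonRetryableError:
            raise  # unsafe operations are never retried
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))  # base, 2x base, 4x base, ...
```

Passing `sleep` as a parameter keeps the function testable without real waiting.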

Views: Inspect & Ship Confidently

Capture artifacts with premium previews—diffs, logs, tables, images, decks—so every edit maps to evidence and reviews fly by.

FAQ: Quick Answers

Common questions about getting started with Opulent OS Heavy, from task phrasing to troubleshooting.

References

- GEPA reflective loop tutorial: dspy.ai/tutorials/gepa_facilitysupportanalyzer

- Workspace enablement stack (Daytona deep dive): daytona.io/…/ai-enablement-stack

- Orchestration retry policies (Temporal docs): docs.temporal.io/…/retry-policies

- Workflow event history (Temporal docs): docs.temporal.io/workflow-execution/event

- Industry best practices for AI agents: Insights from leading AI engineering platforms including production deployment patterns, interactive planning workflows, and automated PR review systems.

- Data analytics workflows: Field-tested patterns from enterprise AI data analysis implementations including MCP database integrations, knowledge macro systems, and verification protocols.

- VC — Due diligence checklist: 4degrees.ai/…/vc-due-diligence-checklist

- VC — Checklist (alt): affinity.co/…/due-diligence-checklist-for-venture-capital

- VC — Playbook template: dealroom.net/…/venture-capital-due-diligence-checklist

- Market sizing — TAM/SAM/SOM: hubspot.com/…/tam-sam-som

Wrap Up: The Era of Agents That Finish

Context engineering is the #1 job of engineers building agents. Heavy pairs deterministic orchestration with intelligent tool design, strategic memory management, and parallel verification—so workflows adapt to context pressure and finish with auditable artifacts.

We're past the era of demos that work once. Production agents must handle multi-hour tasks, manage complex context, select tools wisely, and verify their own work. Heavy provides the patterns, infrastructure, and learned workflows to make this real.